Why was I up at 00:30 reading an ECMA spec from 1970 and a report from the Union Carbide Corp Nuclear Division? Because I found an old geeky bracelet at our summer cottage, and of course I had to decipher it.

My first thought was naturally binary ASCII. A quick mental check showed it had potential, but there were strange values interspersed (for example the control character 0x10, DLE, which I had never heard of). The ASCII conversion produced “2yh#”, which was not very promising. EBCDIC didn’t yield any better results.
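The ASCII attempt can be sketched roughly as follows. This is only an illustration: the `sample_rows` are made up (they are not the bracelet's actual rows), the `o`/`.` notation for holes and blanks is my own convention, the leftmost column is assumed to be the most significant bit, and the sprocket-hole column is left out.

```python
def row_to_byte(row: str, hole: str = "o") -> int:
    """Read one tape row as a binary number, leftmost column = most significant bit."""
    value = 0
    for ch in row:
        value = (value << 1) | (1 if ch == hole else 0)
    return value


def decode_ascii(rows):
    """Decode rows as ASCII, showing non-printable codes as <0xNN>."""
    out = []
    for row in rows:
        code = row_to_byte(row)
        if 0x20 <= code < 0x7F:
            out.append(chr(code))
        else:
            out.append(f"<0x{code:02X}>")
    return "".join(out)


# Hypothetical rows for illustration only (not read off the bracelet).
sample_rows = [
    ".o..o...",  # 0x48, 'H'
    ".oo.o..o",  # 0x69, 'i'
    "...o....",  # 0x10, the DLE control character
]

print(decode_ascii(sample_rows))  # prints Hi<0x10>
```

Stray control codes in the middle of otherwise readable output, like the `<0x10>` here, are the kind of hint that the encoding guess is wrong.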

Then something stirred in my head, and I realized from the line of small dots that it was, of course, punch tape. That led me to read up on punch tape formats, including the ECMA-10 standard for Data Interchange on Punched Tape from 1970. The problem was that practically all of the standards defined ASCII as the character encoding, which I had already ruled out.

However, it did contain the next clue: the ECMA-10 standard specifies that each row includes a parity bit, so that every row contains an even number of holes (i.e. even parity). The bracelet had an odd number of “holes” on every row, so I had to search for a punch tape standard that used odd parity.
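The parity check itself is simple enough to sketch in a few lines. The function names and the `o`/`.` row notation are my own assumptions for illustration, and the example rows are invented rather than taken from the bracelet.

```python
def hole_count(row: str, hole: str = "o") -> int:
    """Count the punched holes in one tape row."""
    return sum(1 for ch in row if ch == hole)


def tape_parity(rows) -> str:
    """Return 'even' or 'odd' if every row agrees, otherwise 'mixed'."""
    parities = {hole_count(row) % 2 for row in rows}
    if parities == {0}:
        return "even"
    if parities == {1}:
        return "odd"
    return "mixed"  # rows disagree, or there were no rows at all


# Hypothetical rows for illustration only.
even_rows = ["oo......", ".o.o.o.o"]  # 2 and 4 holes
odd_rows = ["o.......", ".ooo...."]   # 1 and 3 holes

print(tape_parity(even_rows))  # prints even
print(tape_parity(odd_rows))   # prints odd
```

If every row comes out odd, an even-parity format such as the one in ECMA-10 can be ruled out, which is the reasoning that pointed me towards an odd-parity code.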

The decisive clue came from a post on a CNC machining forum. It referred to EIA/RS-244 coded tape, often used in CNC machines, as having an odd number of holes on every row. Googling that took me to the Union Carbide Corp Nuclear Division report “Punched-tape code and format for numerically controlled machines” from 1969.

On page 9 it contains the conversion from punched holes to letters and numbers. I was ecstatic when I started getting letter after letter from the code.

So what does it say? “CHED TAPE. THIS ” The first word might be truncated from “PUNCHED TAPE”. I’m unsure what “this” and the space indicate. Perhaps that was all that fit on the bracelet, or maybe there was a pair where the message continued?

EDIT: I originally decoded it as “CHAD TAPE. THIS”, where chad tape is punched tape used in telegraphy/teletypewriter operation (chad being the paper fragments produced by punching holes in the tape). As John noted in the comments, the third letter is actually an E, not an A.

My father-in-law was unsure of the bracelet’s origin, but thought it might have been made as a nostalgia piece in the 1980s at the University of Helsinki physics department, where punch tape had been in common use.

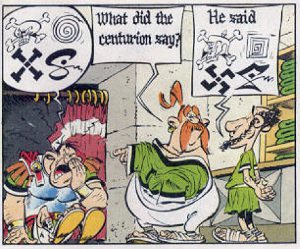
